Combining popular voice recognition technologies with conversational AI opens the door for incredible potential in the enterprise space. Here’s everything you need to know about what is possible today…

The race to establish speech and voice recognition as the dominant interface for smart homes is in full swing. Tech giants like Google and Amazon are pouring resources into voice technology, aiming to make their smart assistants as indispensable as computers and smartphones.

Just as Google’s search algorithm reshaped information consumption and revolutionized advertising, AI-driven voice computing is poised to drive a similar shift—not just in homes but across the enterprise landscape. As smart home devices and voice-powered services become more accessible, adoption is accelerating. At the same time, rapid advancements in AI are turning once-futuristic applications into reality with impressive results.

A benchmarking report from Cognilytica recently found that voice assistants still require a lot of work before even half of their responses are acceptable, due in part to early voice recognition programs being only as good as the programmers who wrote them. But things are getting better. Thanks to permanent connections to the internet and data centers, the complex mathematical models that power voice recognition technology can sift through the huge amounts of data that companies have spent years compiling in order to learn and recognize different speech patterns.

They can interpret vocabulary, regional accents, colloquialisms and the context of conversations by analyzing everything from recordings of call center agents talking with customers to thousands of daily interactions with a digital assistant.

In this article, we will address the specifics of how the technology works in relation to conversational AI, as well as the challenges and our recommended approach for integrating AI voice recognition technology into a virtual agent.

How it works

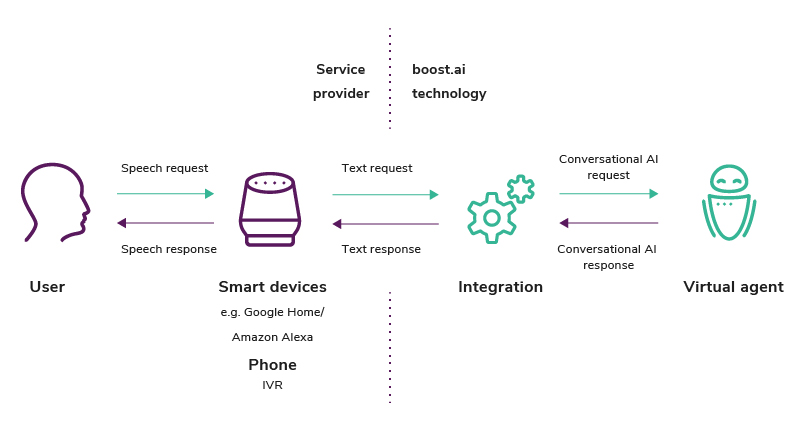

A solid conversational AI foundation is crucial for successful AI voice recognition. The speech layer is added on top of the virtual agent, acting as a regular integration, similar to third-party apps.

This allows boost.ai to stay vendor-agnostic, supporting the best AI voice recognition technology available.

The process is straightforward: speech is transcribed into text, which is sent to the virtual agent, where conversational AI predicts the matching intent. Once the correct intent is identified, the agent's text response is forwarded to the speech interface, which reads it aloud to the user.
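The flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `handle_voice_turn`, the `fake_*` helpers and the `REPLIES` table are hypothetical stand-ins, not boost.ai or vendor APIs. In a real deployment, the speech-to-text and text-to-speech steps would be handled by the chosen voice platform.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class VoiceTurn:
    transcript: str   # what the speech layer heard
    intent: str       # what the virtual agent predicted
    reply_text: str   # the text answer that will be spoken


def handle_voice_turn(
    audio: bytes,
    speech_to_text: Callable[[bytes], str],
    predict_intent: Callable[[str], str],
    replies: Dict[str, str],
    text_to_speech: Callable[[str], bytes],
) -> Tuple[VoiceTurn, bytes]:
    """Run one spoken request through the speech layer and the virtual agent."""
    transcript = speech_to_text(audio)        # 1. transcribe speech into text
    intent = predict_intent(transcript)       # 2. virtual agent predicts the intent
    reply_text = replies.get(intent, "Sorry, I did not understand that.")
    spoken = text_to_speech(reply_text)       # 3. speech interface voices the reply
    return VoiceTurn(transcript, intent, reply_text), spoken


# Toy stand-ins (assumptions for illustration only).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_intent(text: str) -> str:
    return "apply_for_loan" if "loan" in text.lower() else "unknown"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


REPLIES = {"apply_for_loan": "Tell me how much you would like to borrow."}

turn, spoken = handle_voice_turn(
    b"How do I apply for a loan?", fake_stt, fake_intent, REPLIES, fake_tts
)
```

Because the speech functions are passed in as parameters, the same agent logic can sit behind any voice vendor, which mirrors the vendor-agnostic layering described above.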

Applicable technologies

Today’s market offers a variety of voice technologies, including Google Assistant, Amazon Alexa, Microsoft Cortana, and others. Each is competing for dominance, aiming to become the primary way we interact with the internet in the future.

Boost.ai currently integrates with Google Home and Amazon Alexa. We also support IVR using Twilio.

Challenges

We have identified three major pain points that need to be overcome in order for voice to be effectively integrated with conversational AI:

Presenting external links, media and buttons

The majority of chatbots and virtual agents rely heavily on either action links (i.e. buttons) that move a conversation along, or external links that take a customer away from the conversation entirely. Both pose a difficulty for voice-only interactions, as do enrichments such as images and videos.

Asking Google Home “How do I apply for a loan?” only to be told “Click here to do it” doesn’t make much sense in a voice setting and will ultimately lead to a failed interaction.

(Note: products such as Google’s Nest Hub and Amazon’s Echo Show combine smart screen technology with voice integration to tackle some of these issues. However, the adoption rate of these products is drastically lower than that of their voice-only alternatives.)
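One way to picture the problem is as a conversion step between a chat reply and a spoken one. The sketch below is hypothetical: the `ChatReply` structure and `to_voice_reply` function are assumptions made for illustration, not part of any real product. It turns button labels into spoken options and drops URLs and media, which cannot be read aloud in a useful way.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ChatReply:
    text: str
    buttons: List[str] = field(default_factory=list)  # action link labels
    links: List[str] = field(default_factory=list)    # external URLs
    media: List[str] = field(default_factory=list)    # images, videos


def to_voice_reply(reply: ChatReply) -> str:
    """Build a spoken version of a chat reply (hypothetical rules)."""
    parts = [reply.text]
    if reply.buttons:
        if len(reply.buttons) == 1:
            options = reply.buttons[0]
        else:
            options = ", ".join(reply.buttons[:-1]) + " or " + reply.buttons[-1]
        parts.append(f"You can say: {options}.")
    if reply.links:
        # Never read a URL aloud; offer another channel instead.
        parts.append("I can send you a link with more details.")
    # Media is skipped entirely: it has no voice-only equivalent.
    return " ".join(parts)


chat = ChatReply(
    text="We offer several loan types.",
    buttons=["Car loan", "Mortgage"],
    links=["https://example.com/loans"],
    media=["loan-overview.png"],
)
voice_text = to_voice_reply(chat)
```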

Deciphering subtle linguistic nuances

While AI voice recognition technology continues to develop, it is still drastically less robust than its chat-based counterpart. At boost.ai, our high-performing modules boast 2,000+ intents across enterprise-specific domains such as banking, insurance, telecom and the public sector.

Voice assistants, on the other hand, are often unable to distinguish between the subtle linguistic nuances that are common in speech. For instance, telling apart the phrases “I have bought a car” and “I want to buy a car” can already be a difficult exercise for conversational AI, and adding speech on top only increases the complexity.

Keeping customers glued to the happy path

Customers will not always follow what’s known as “the happy path”; that is, they don’t always follow the conversation flow to the endpoint you desire. It’s only human nature to jump back and forth between conversation topics, yet keeping up with this requires specific context action functionality from a conversational AI solution.

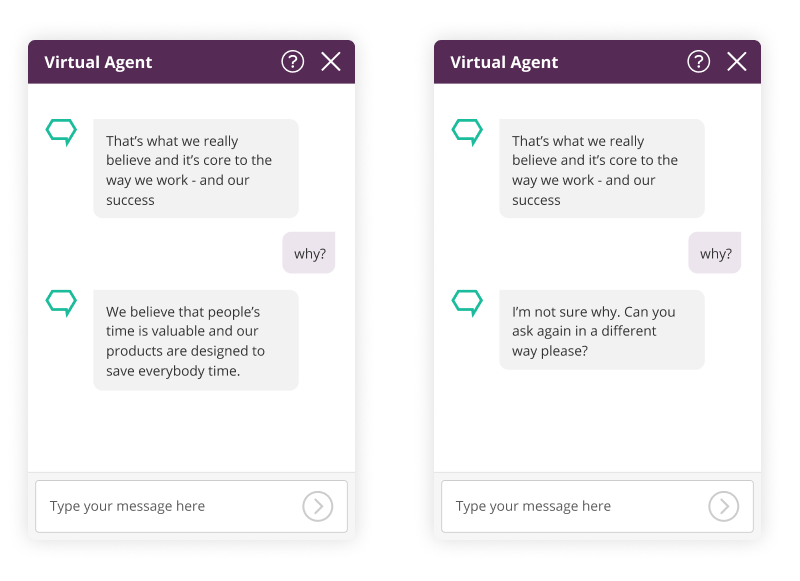

For example, a user may ask a question about boost.ai’s company values. They may then want more information and follow up by asking ‘Why?’:

- When we define the ‘Why?’ intent in a context action, a new action is defined and we tell the user why we believe people are key to the boost.ai values.

- Without a context action, we trigger the same ‘Why?’ intent but give a boilerplate answer, which is too general and doesn’t actually answer the question.
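The difference between the two cases can be sketched as a lookup that takes the previous intent into account. Everything here is an assumption for illustration: the intent names, the reply texts and the `reply_for` function are hypothetical and do not reflect how boost.ai implements context actions.

```python
from typing import Dict, Optional, Tuple

# Generic replies, used when no context action applies (hypothetical texts).
DEFAULT_REPLIES: Dict[str, str] = {
    "company_values": "People are key to our values.",
    "why": "Could you tell me a bit more about what you are asking?",
}

# Context actions: (previous intent, current intent) -> specific reply.
CONTEXT_ACTIONS: Dict[Tuple[str, str], str] = {
    ("company_values", "why"): "[explanation of why people are key to our values]",
}

FALLBACK = "Sorry, I did not understand that."


def reply_for(intent: str, previous_intent: Optional[str] = None) -> str:
    """Prefer a context-specific reply; otherwise use the generic one."""
    if previous_intent is not None:
        contextual = CONTEXT_ACTIONS.get((previous_intent, intent))
        if contextual is not None:
            return contextual
    return DEFAULT_REPLIES.get(intent, FALLBACK)


with_context = reply_for("why", previous_intent="company_values")
without_context = reply_for("why")
```

With the context action defined, the follow-up ‘Why?’ resolves to the specific explanation; without it, the same intent falls back to the generic reply.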

Recommendations

For companies interested in exploring what is possible with voice-based conversational AI, Gartner recommends starting simultaneous pilots with both voice-enabled and text-based virtual agents to fully understand the opportunities and limitations of the technology.

We also offer the following recommendations as a guide to getting the most out of voice:

Keep content short and speech-friendly

It's crucial to customize content and replies for optimal speech integration. Typically, textual responses should be concise yet informative. They probably need to be briefer than in chats, with limited consecutive replies, to avoid prolonged spoken answers.
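A simple check can help flag replies that are likely to run too long when spoken. The sketch below is a rough heuristic and rests on assumptions: the speaking rate of 150 words per minute and the 10-second limit are illustrative values, not recommendations from the source, and `is_speech_friendly` is a hypothetical helper.

```python
def estimated_speech_seconds(text: str, words_per_minute: int = 150) -> float:
    """Estimate how long a reply takes to read aloud (assumed speaking rate)."""
    word_count = len(text.split())
    return word_count / words_per_minute * 60


def is_speech_friendly(text: str, max_seconds: float = 10.0) -> bool:
    """Flag replies whose estimated spoken length exceeds an assumed limit."""
    return estimated_speech_seconds(text) <= max_seconds


short_reply = "You can apply for a loan in our mobile app."
long_reply = " ".join(["word"] * 100)  # stands in for a long chat answer

short_ok = is_speech_friendly(short_reply)
long_ok = is_speech_friendly(long_reply)
```

A check like this could be run over existing chat content to find answers that need a shorter, voice-specific variant.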

Clearly define your use case(s)

Start with one or two use cases to test the waters and gain experience on how to talk to your customers. Map out limited use cases that actually make sense for voice rather than throwing everything at the wall to see what sticks.

Context functionality is crucial

Make sure that your vendor has support for context actions. These are crucial to maintaining natural conversation flows with customers and looping them back around to your desired end goals.

Start with a strong language understanding core

Robust language understanding is a must if you want your voice use cases to work well. A strong foundational Natural Language Understanding (NLU) is mandatory, while additional proprietary technologies, such as boost.ai’s Automatic Semantic Understanding (ASU), hold the key to helping voice recognition technology reach its full potential.

ASU enables a deeper understanding of a customer’s overall intent, allowing conversational AI to understand which words in a request are important, and when. Through this technology, we are able to reduce the risk of false positives to a minimum, which is fundamental to ensuring that a voice bot can deliver the correct responses.